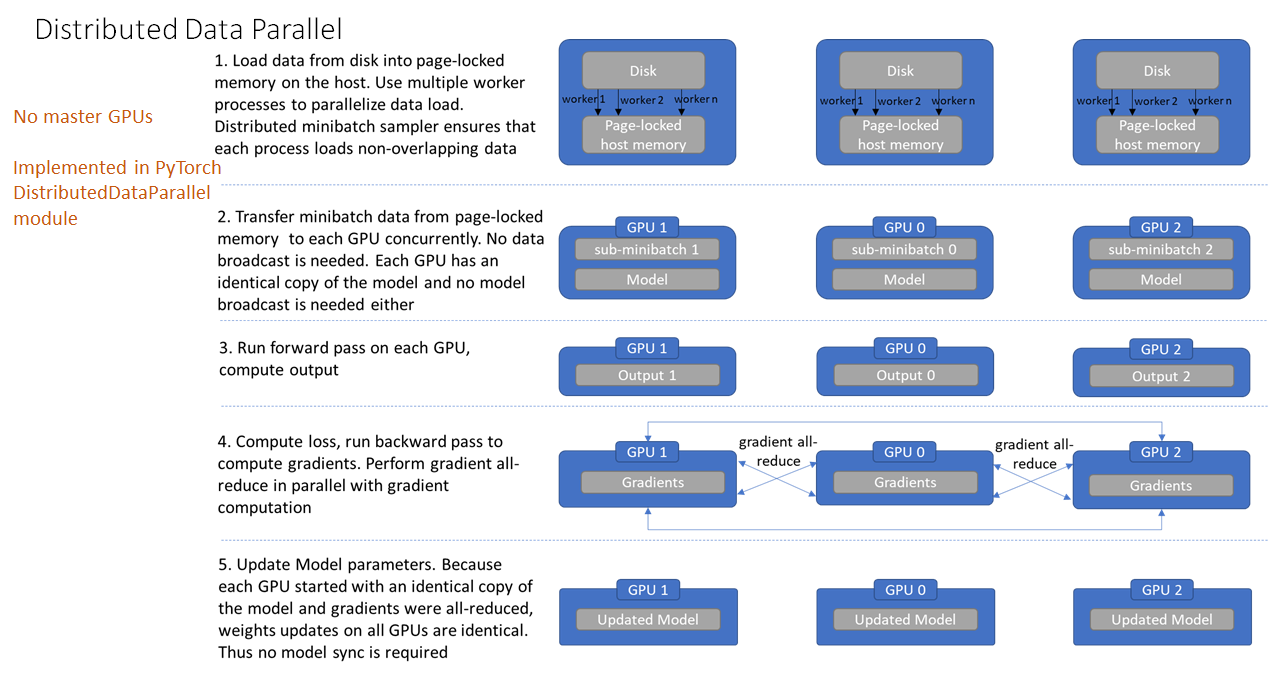

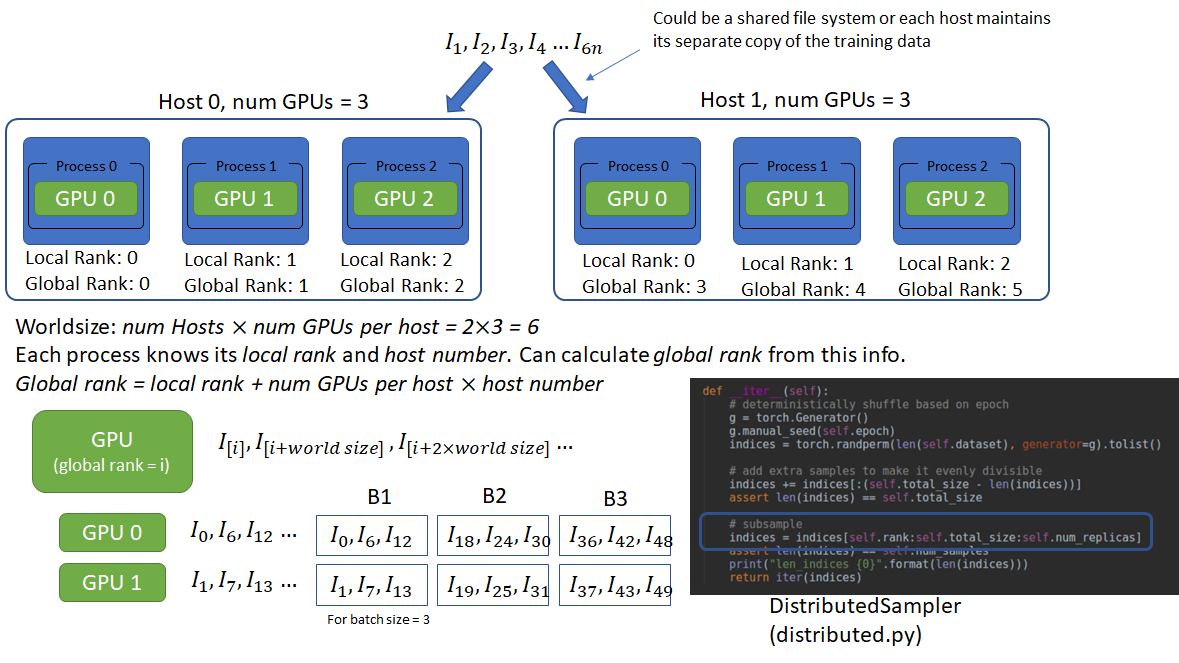

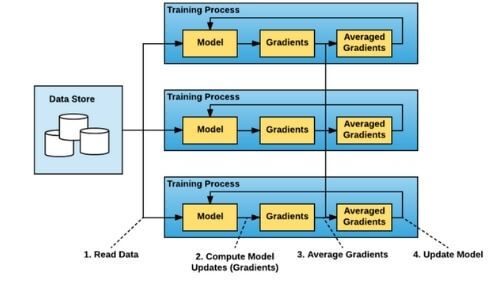

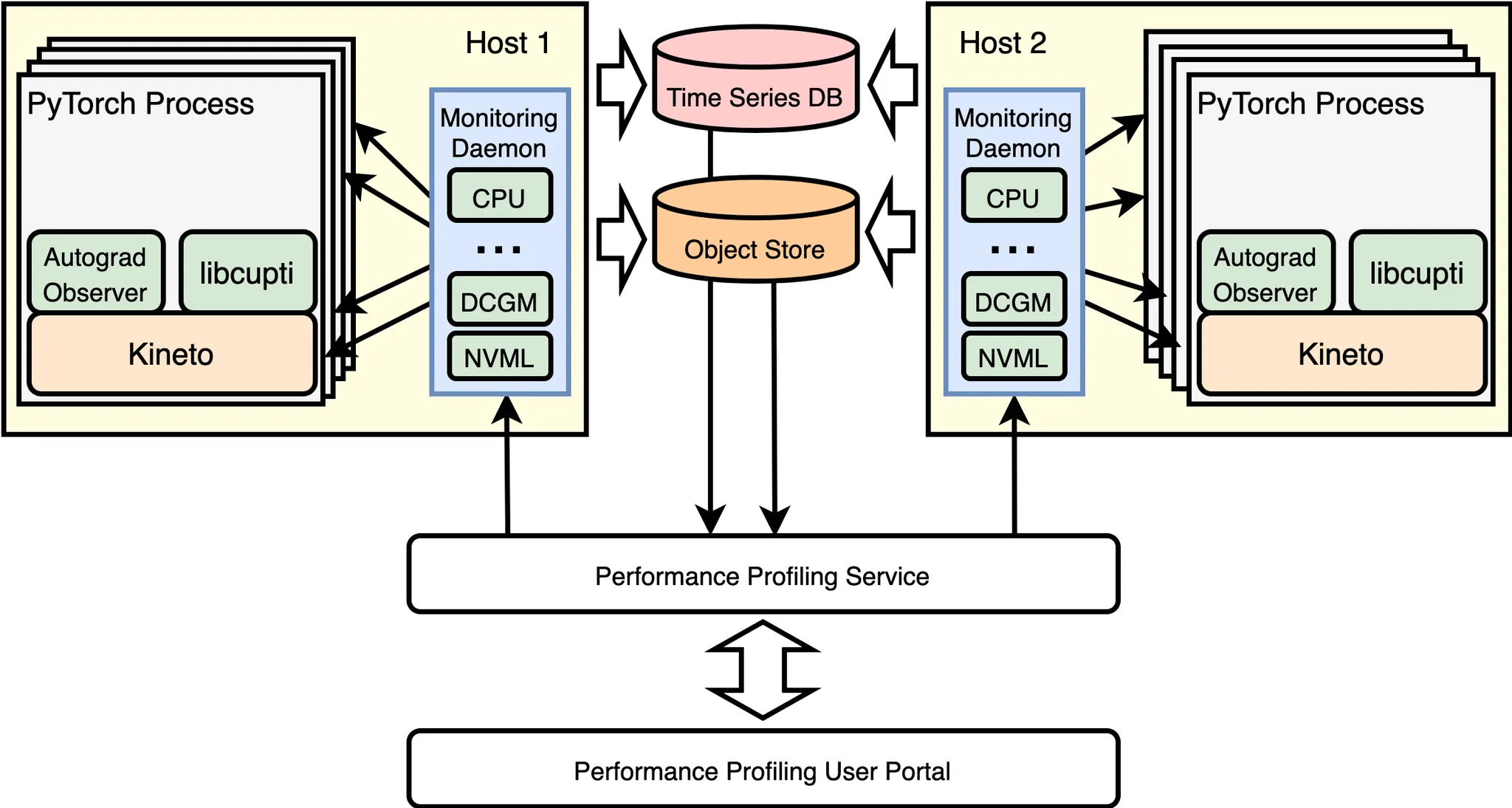

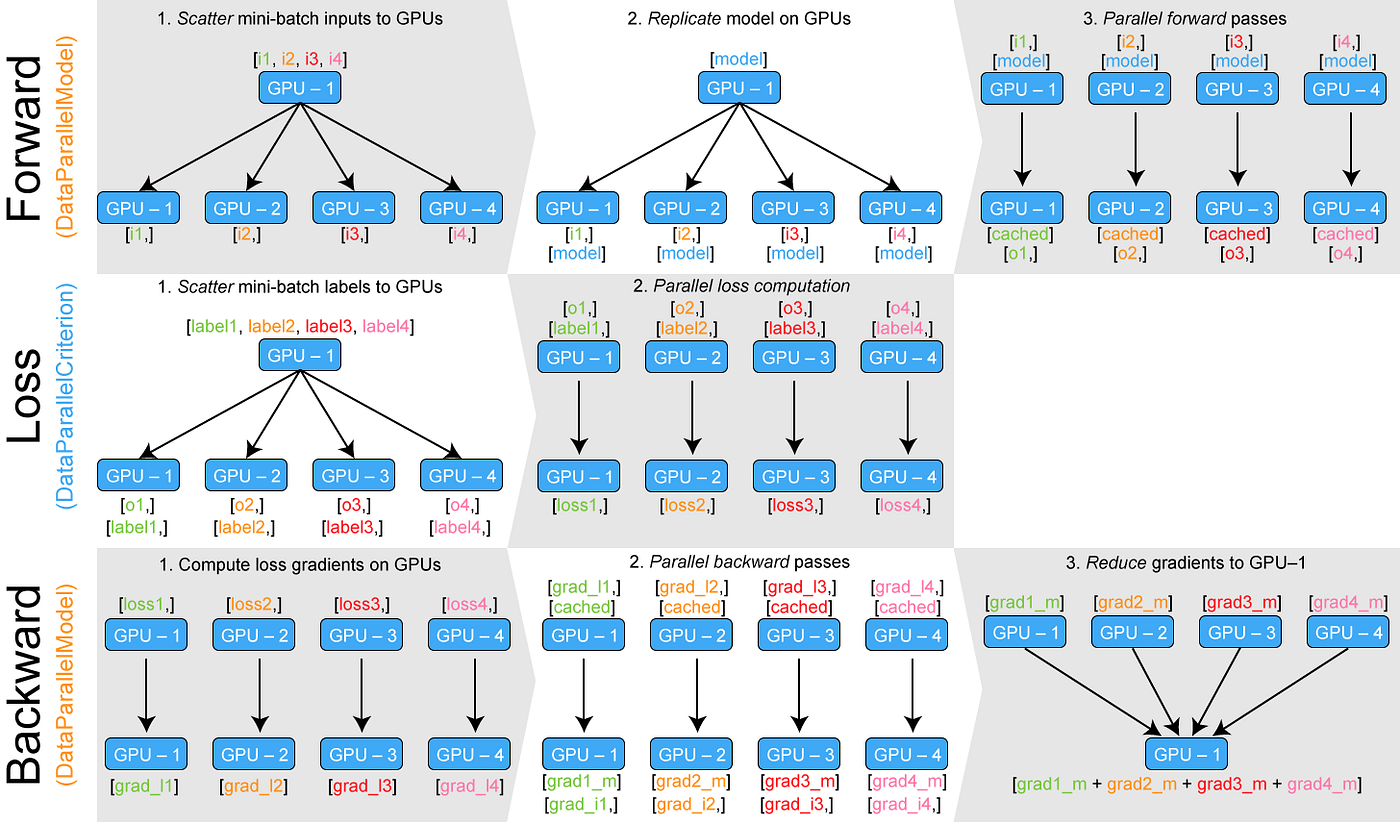

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer

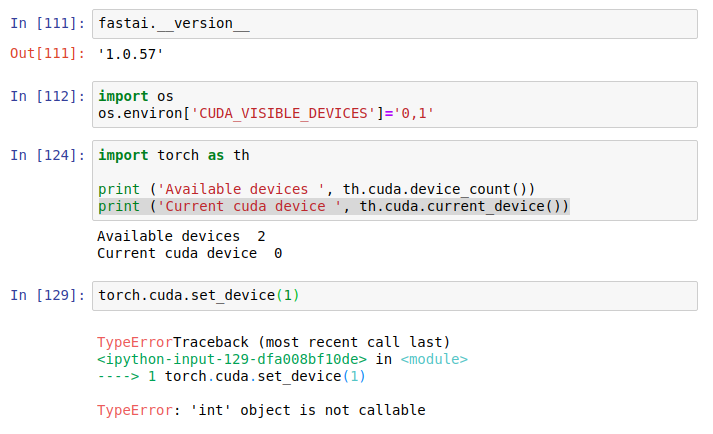

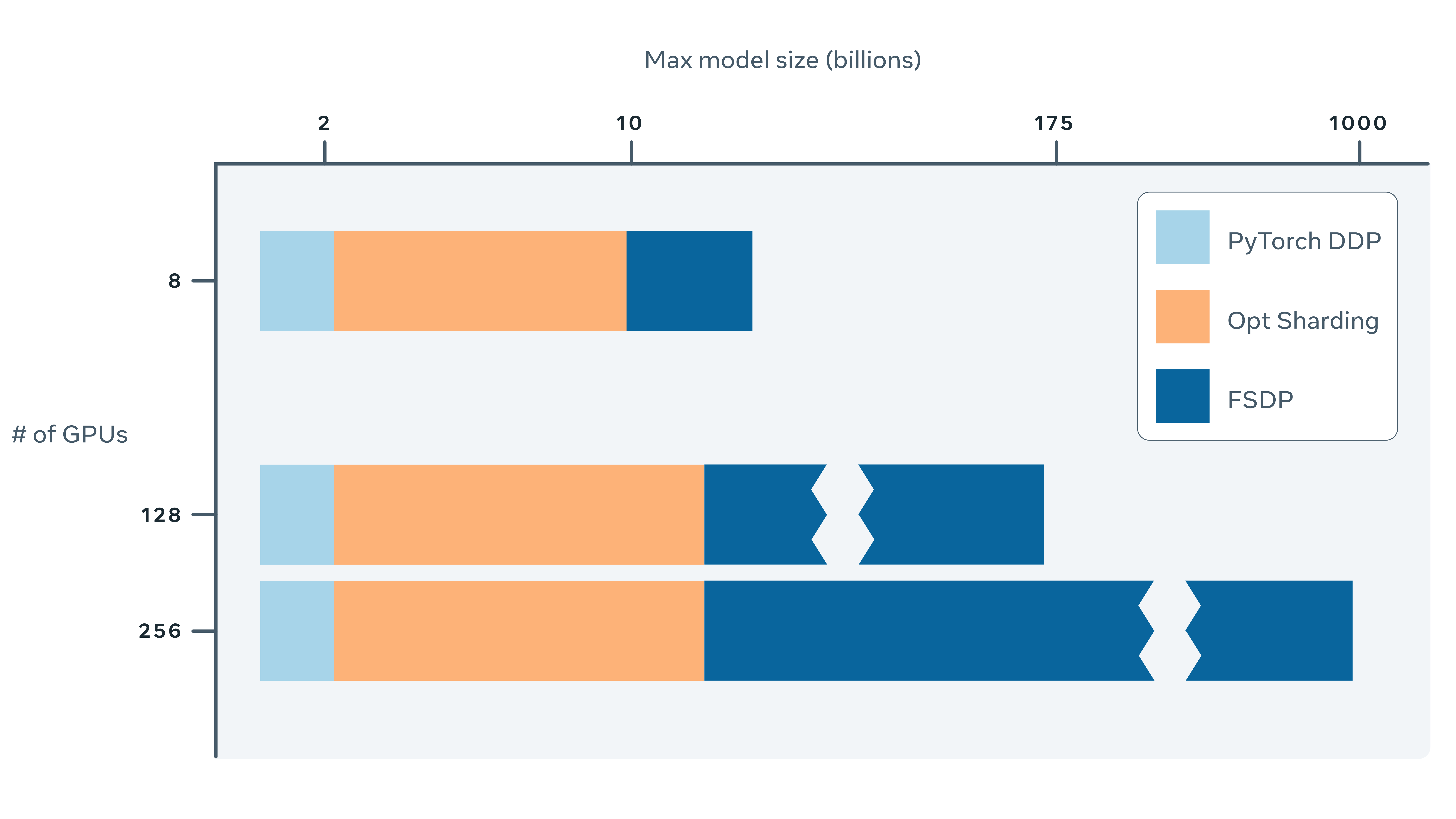

💥 Training Neural Nets on Larger Batches: Practical Tips for 1-GPU, Multi-GPU & Distributed setups | by Thomas Wolf | HuggingFace | Medium

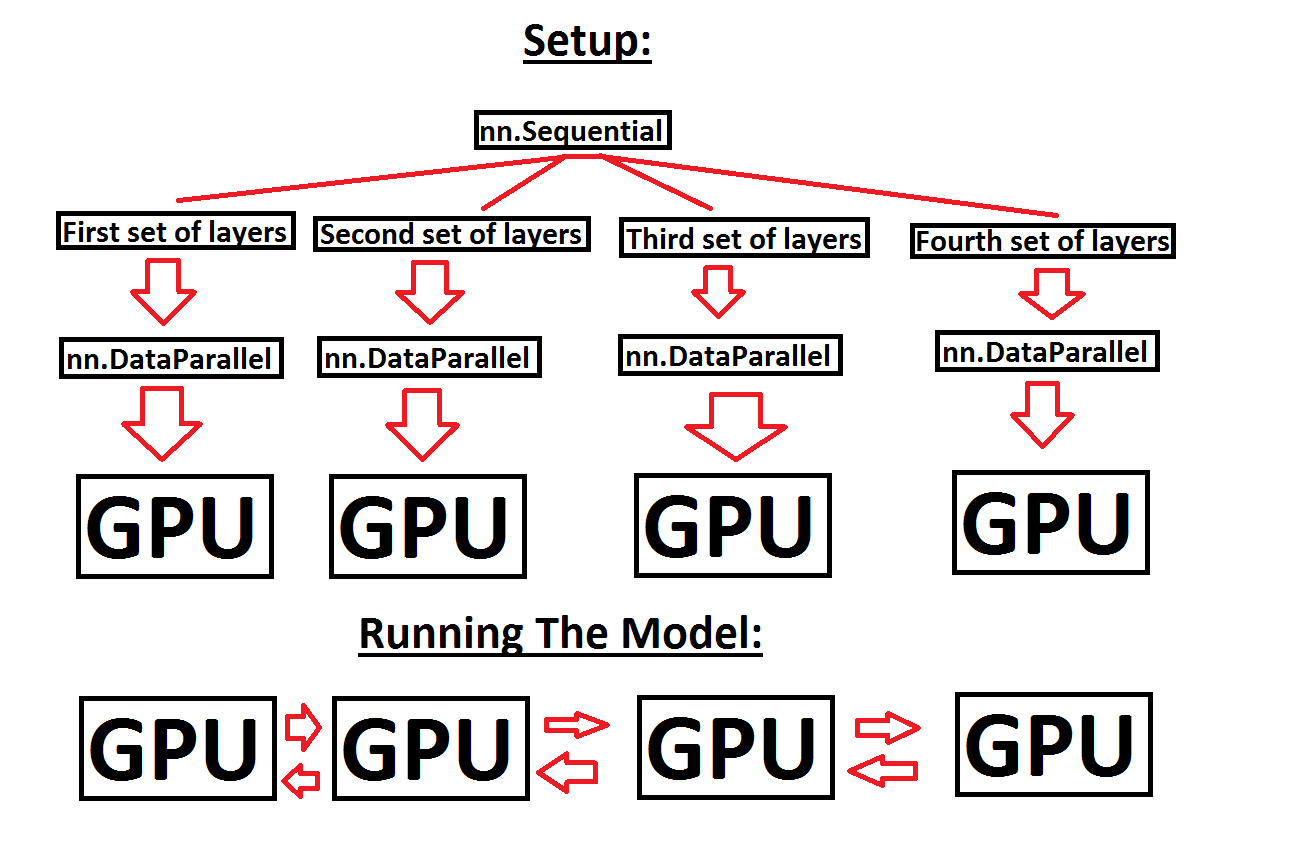

Help with running a sequential model across multiple GPUs, in order to make use of more GPU memory - PyTorch Forums